How AI Surveillance Tech Is Creeping From the Southern Border Into the Rest of the Country

Border surveillance used to mean fences, floodlights, and the occasional low-flying helicopter. Now the landscape looks very different. Steel gives way to sensors. Towers watch in silence. Algorithms sort through everything. What started as a national security project in dusty stretches of West Texas now leaks into city streets, small-town police work, and everyday life. The striking part isn’t the hardware. It’s the logic: once a tool can track people at the border, someone will argue it can track people anywhere. That argument keeps winning, mostly in the dark, far from public debate or meaningful consent.

From Blimps and Towers to Data Pipelines

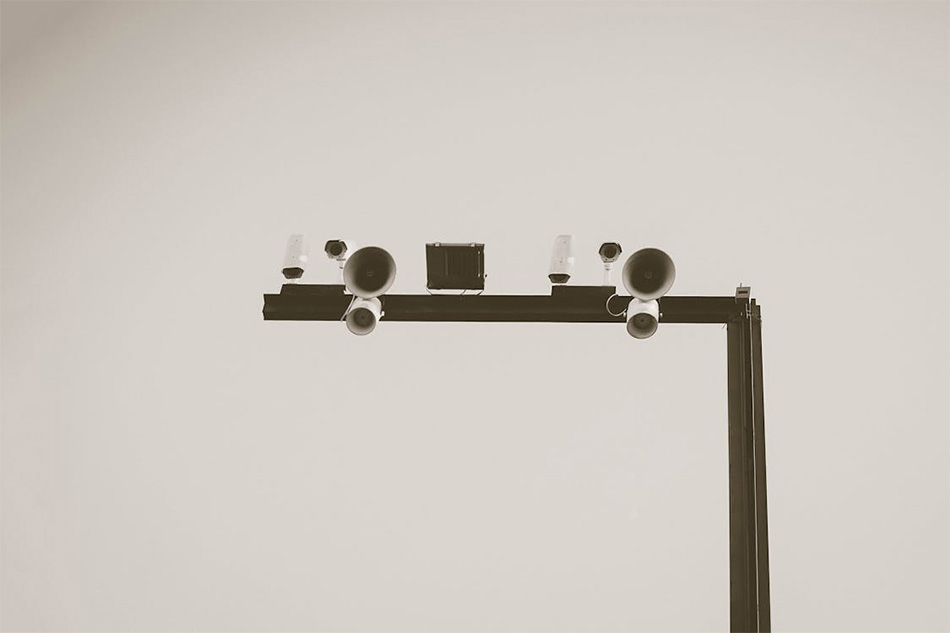

The border zone turned into a laboratory. Not of ideas, of gadgets. Tethered aerostats loom over the desert like strange, patient balloons. On the ground, “autonomous” towers bristle with cameras, radar, thermal imaging, all feeding digital streams into government systems. Officials sell this as a smarter alternative to building more physical wall. Less concrete, more code. The label sounds harmless: detection, not identification. Just movement, not people. Yet those towers capture high-resolution images, store them, and fold them into databases. Once data exists at that scale, pressure grows to analyze it, re-use it, share it, and never delete it.

When ‘Border’ Stops Meaning Geography

Border enforcement no longer sits at the river or the fence line. It stretches along highways, bus stations, airports, even county jails hundreds of miles inland. AI turns that stretch into something more like a web. License plate readers track cars far from any crossing. Cellphone searches at airports mirror tactics first honed at ports of entry. The concept matters: the “border zone” becomes less a place and more a moving perimeter around certain people. Once officials treat the whole interior as fair game for border tools, the constitutional protections that look firm on paper start to feel very negotiable in practice.

Facial Recognition Jumps the Fence

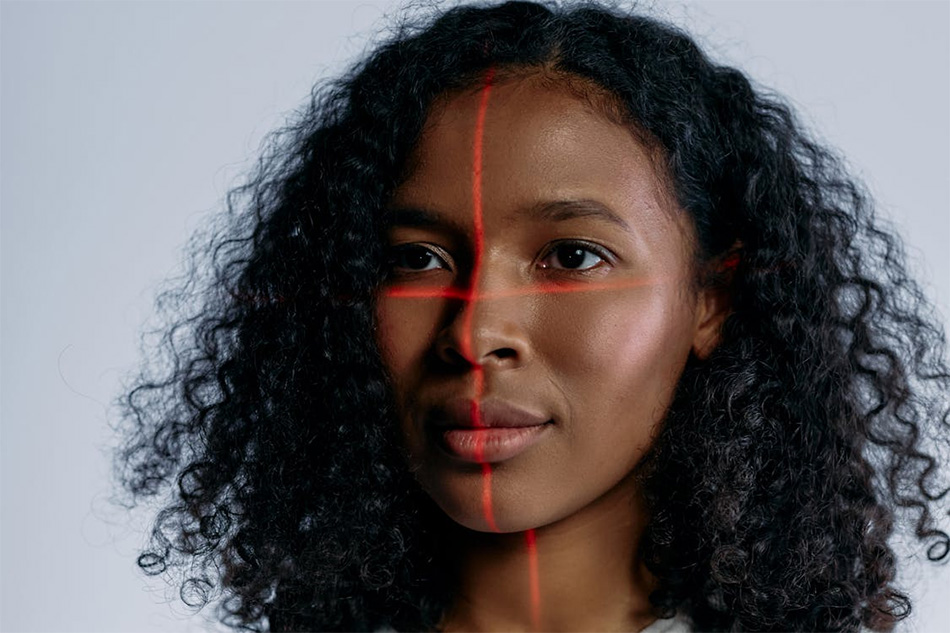

Take facial recognition. Systems first built so Customs and Border Protection could check travelers now sit in the hands of ICE agents on ordinary city streets. Mobile Fortify, for example, lets agents snap a photo and query huge databases in seconds. An agent in Minneapolis calling out a stranger’s name in public isn’t a magic trick. It’s the quiet result of years of database building and software tuning. The risk doesn’t stop at one correct match. These systems misidentify people, especially people of color, and still push agents to act with total confidence. That’s a recipe for wrongful arrests and quiet harassment.

Local Police as Federal Sensor Network

The creep doesn’t happen only through federal agents. Local police departments plug into the same tools. Traffic cameras feed license plate systems. Jail booking photos travel into shared facial recognition platforms. Data from a sheriff near the border can end up in the same pool as scans from a city thousands of miles away. Once connected, those networks act like a single nervous system. The public rarely sees the contracts, memorandums, or software demos that make it possible. Yet the result shows up in daily life: more stops driven by unseen alerts, more questioning justified by screens, not suspicion.

The story here isn’t just about technology creeping north. It’s about a policy habit: test harsh tools on people with the least power, then normalize the tools once the outrage fades. The border becomes the exception zone that slowly rewrites the rulebook for everyone. AI-driven surveillance folds into that pattern neatly, because it hides its force behind code, dashboards, and vague promises of efficiency. The signal is simple enough: unless the public draws a hard line on where and how these systems operate, the country will wake up inside the “smart wall” rather than standing in front of it.