AI Chips: The New Arms Race in Silicon Valley

The numbers are startling. Nvidia's profits haven't just drifted upward; they've exploded, powered by demand for its graphics processing units. The company owes much of its dominance to the AI boom. But here's the twist: the landscape isn't static. Far from it. New challengers are emerging from every corner of Big Tech, armed with custom-built chips, field-programmable devices, and on-device processors. Each approach serves a different agenda, and each threatens to erode Nvidia's once-unchallenged supremacy. The chip wars aren't coming. They're here, and the front lines shift by the week. One pattern is clear: nobody is resting easy.

GPUs: From Gaming to AI Powerhouses

Forget the old image of GPUs as tools for gamers. That's the past. Nvidia's pivot from pushing pixels to powering artificial intelligence is one of the most dramatic business transformations in recent memory. The real catalyst? AlexNet, the 2012 image-recognition breakthrough that was trained on Nvidia GPUs. Suddenly, the same chips that made video games look lifelike became essential for training neural networks. Today, GPUs work alongside CPUs in AI data centers around the globe, not as rivals but as partners, taking on the heavy lifting. Their strength is parallel math: thousands of cores performing the same operations on many numbers at once, which is exactly what neural networks need for both training and inference. These chips are no longer niche. They're the general-purpose engines of the AI revolution.

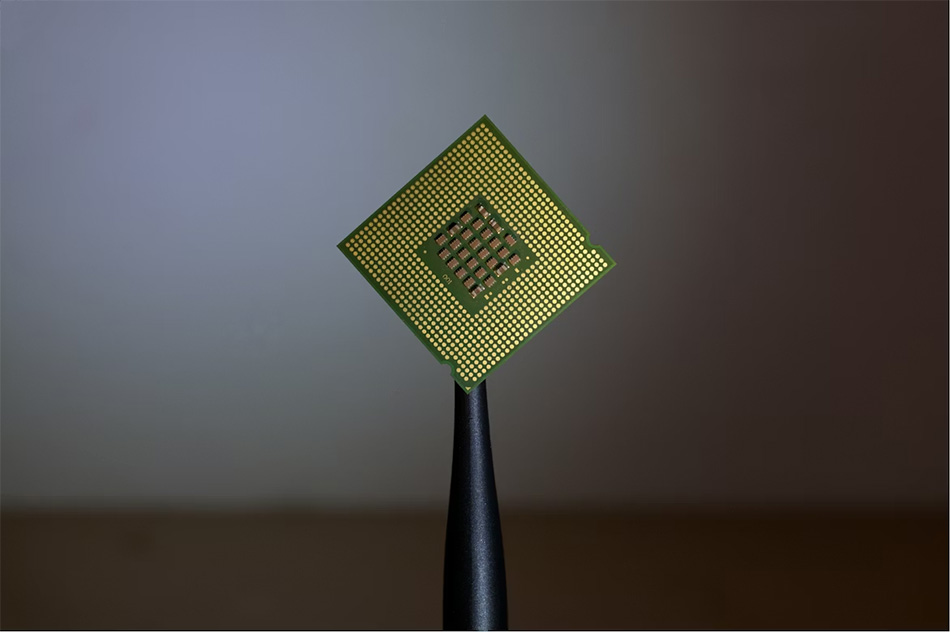

ASICs: Custom Chips, Custom Advantage

Sticking to off-the-shelf solutions? That's not how the tech giants play the game anymore. Custom ASICs, or application-specific integrated circuits, are the new gold rush. Google built its own Tensor Processing Unit. Amazon is pushing its Trainium chips. Even OpenAI is jumping in, with Broadcom as a partner. These aren't minor upgrades. ASICs are hardwired for a narrow set of tasks, which makes them more power-efficient than general-purpose chips at those tasks. They are expensive to design, but once deployed at scale they can be cheaper to run, and, crucially, they threaten to loosen Nvidia's grip on the AI hardware market. Industry experts like Daniel Newman expect ASICs to outpace GPUs in growth. That's a prediction, but it's also a warning shot.

FPGAs: Flexibility Over Raw Power

Enter the field-programmable gate array. FPGAs aren't as famous as GPUs or as hyped as ASICs, but don't underestimate them. They're chameleons: their logic circuits can be reconfigured after manufacturing, so the same chip can take on different roles, from signal processing to AI inference. Companies prize that adaptability, especially when requirements change faster than hardware product cycles. FPGAs may lack the brute force of GPUs on some workloads, but their flexibility is an asset. When algorithms evolve this quickly, the ability to pivot matters more than ever.

On-Device AI: Power at the Edge

Cloud computing gets the headlines, but a quieter revolution is happening closer to the user. On-device AI chips, championed by Qualcomm and Apple, put intelligence directly into smartphones, laptops, and other devices. The benefits are clear. Faster response times, because data doesn't need a round trip to a server. Greater privacy, because personal data can stay on the device. Less reliance on distant data centers. These chips aren't just supporting AI; they're enabling entirely new experiences in the palm of a hand. As more devices gain these capabilities, expect a shift in what users demand and what companies deliver. The AI race isn't just about the cloud; it's about winning the edge.

Conclusion: The Battle Has Only Begun

Anyone betting on a single winner in the AI chip wars is missing the point. The market is fragmenting, and the days of one-size-fits-all solutions are over. Nvidia may sit atop the throne today, but every major player is building or buying its own hardware. Custom ASICs challenge the incumbents. FPGAs bring adaptability. On-device processors reimagine the user experience. No chipmaker, however advanced its products, can rest on its laurels. The only certainty is upheaval. Companies that adapt fastest will shape the next era of artificial intelligence. The rest will scramble to catch up, if they can.