MIT’s Visual Microphone Reconstructs Sounds from Potato Chip Bag Vibrations

Cropped screenshot of the visual microphone project’s official web page (http://people.csail.mit.edu/mrub/VisualMic/)

But it’s not limited to potato chip bags. MIT researchers were able to create a visual microphone capable of analyzing and reconstructing discernible audio based on the vibrations of objects being viewed in a video. Yes, it’s a technology that listens to sounds without any audio signal involved. It’s an algorithm specifically created to recreate or reproduce sound by interpreting the vibrations of potato chip bags, plants, a chocolate snack wrapper, and various other objects being observed by a camera.

It’s the realization of the technology featured in the 2008 Shia LaBeouf movie “Eagle Eye” wherein a super AI villain was listening to conversations by analyzing the vibrations of objects on top of a table inside a soundproof room. This technology was also featured in the TV show “Agents of SHIELD.”

Visual Microphone

A team of researchers at the Massachusetts Institute of Technology, with participants from Microsoft and Adobe, has come up with an algorithm that can reconstruct sounds from the vibrations of objects recorded by a camera. Basically, it can pick up conversations even in a tightly sealed room. It enables plants and crisp packets to act as spying accomplices.

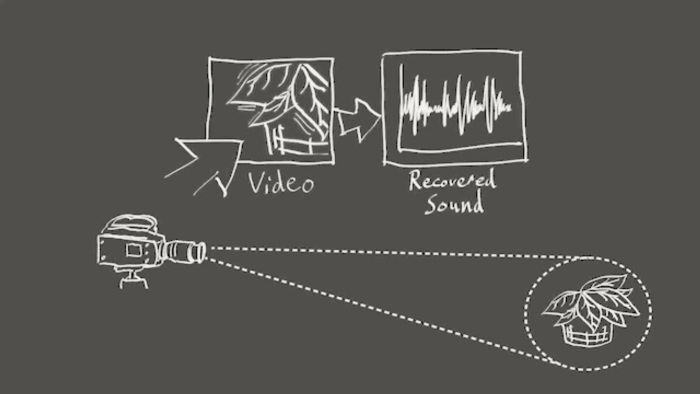

Screenshot of the visual microphone project’s info video (http://people.csail.mit.edu/mrub/VisualMic/)

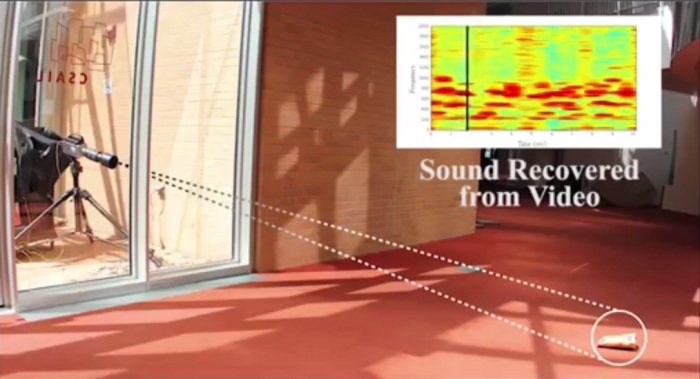

This visual microphone is capable of extracting audio from visual information, detecting and reconstructing intelligible speech from a variety of objects that sit behind soundproof glass, 15 feet (4.5 meters) away from the camera. It can “listen” to what people are talking about behind soundproof glass. Other objects from which the camera can possibly recover intelligible audio are the leaves of a potted indoor plant, aluminum foil wrappers, and the surface of a glass of water.

The MIT-led research team is expected to present its findings at this year’s SIGGRAPH computer graphics conference. The research team is composed of Abe Davis, Neal Wadhwa, Frédo Durand, and William T. Freeman of MIT CSAIL; Michael Rubinstein of Microsoft Research; and Gautham Mysore of Adobe Research.

How Does It Work

As MIT electrical engineering and computer science graduate student Abe Davis explained, “when sound hits an object, it causes the object to vibrate.” This vibration generates a “very subtle visual signal that’s usually invisible to the naked eye.” Detecting these visual signals requires a high-quality camera capable of recording at extremely high frame rates. The camera should record at a frame rate higher than the frequency of the audio signal (by the sampling theorem, strictly more than twice the highest frequency to be recovered). In some of its experiments, the MIT team used a camera capturing 2,000 to 6,000 frames per second. That is up to a hundred times faster than the cameras in high-end smartphones and digicams, but considerably slower than the leading commercial high-speed cameras, some of which record up to 100,000 frames per second.
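The frame rate requirement can be made concrete with a little arithmetic. The sketch below is only an illustration of the general sampling rule, not part of the MIT software; the function names are made up for this example.

```python
def max_recoverable_frequency_hz(frames_per_second: float) -> float:
    """Highest frequency a given frame (sampling) rate can represent.

    This is the Nyquist limit: half the sampling rate.
    """
    return frames_per_second / 2.0


def min_frame_rate_fps(highest_audio_hz: float) -> float:
    """Frame rate the camera must exceed to capture a given audio frequency."""
    return 2.0 * highest_audio_hz


if __name__ == "__main__":
    # Frame rates mentioned in the article: an ordinary camera and the
    # high-speed camera range used in some of the experiments.
    for fps in (60, 2000, 6000):
        limit = max_recoverable_frequency_hz(fps)
        print(f"{fps} fps -> frequencies up to about {limit:.0f} Hz")
```

By this rule, an ordinary 60 fps video can directly represent only very low frequencies, which is why the high-speed camera matters for speech.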

The visual data obtained by the camera is then run through specially written software, built around an algorithm created to translate object vibrations into actual sounds. As the project video shows, the software does an impressive job of this. It is capable of reproducing the tone, the distinctive voice imprint, and even the bass and treble levels.

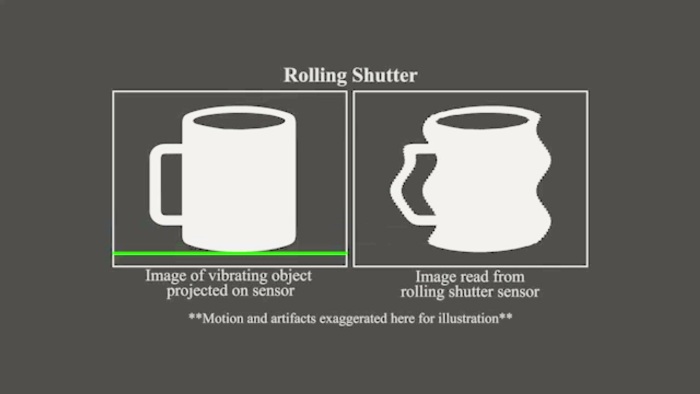

The team also conducted experiments using standard digital SLR cameras that capture video at only 60 frames per second, far below the frame rate normally needed for the sound frequencies involved. Interestingly, the team was still able to capture useful visual sound information by taking advantage of the artifacts caused by the rolling shutter effect present in most digital cameras.
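Why a rolling shutter helps can be sketched with a simple timing model. In a rolling shutter sensor, the rows of a frame are read out one after another rather than all at once, so each row sees the scene at a slightly different moment. The sketch below is a simplified model, not the team's method; the row count and line delay are assumed values chosen only for illustration.

```python
FPS = 60               # frame rate quoted in the article
ROWS = 1080            # assumed number of sensor rows
LINE_DELAY_S = 15e-6   # assumed delay between consecutive row readouts


def row_capture_times(frame_index, fps=FPS, rows=ROWS, line_delay_s=LINE_DELAY_S):
    """Capture time (in seconds) of every row in one frame."""
    frame_start = frame_index / fps
    return [frame_start + row * line_delay_s for row in range(rows)]


def row_sample_rate_hz(line_delay_s=LINE_DELAY_S):
    """Rate at which rows sample the scene while a frame is being read out."""
    return 1.0 / line_delay_s


def inter_frame_gap_s(fps=FPS, rows=ROWS, line_delay_s=LINE_DELAY_S):
    """Time in each frame period during which no row is being read out."""
    return 1.0 / fps - rows * line_delay_s


if __name__ == "__main__":
    print(f"rows sample the scene at about {row_sample_rate_hz():.0f} Hz")
    print(f"unsampled gap between frames: {inter_frame_gap_s() * 1e3:.3f} ms")
```

Under these assumptions the rows act as many closely spaced time samples within each frame, with a short gap between frames where nothing is recorded.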

Screenshot of the visual microphone project’s info video (http://people.csail.mit.edu/mrub/VisualMic/)

The software responsible for translating the vibrations into actual sounds works from changes in the video at the pixel level, inferring motion smaller than a single pixel from subtle changes in pixel color. The MIT team illustrated this with the image of a screen that is red on one side and blue on the other. As vibrations happen, the pixels along the border where the blue and red meet turn slightly purple as the two colors seemingly mix. By tracking these minute changes from frame to frame, the software builds up a signal that can later be played back as sound.
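The red and blue border idea can be imitated with a toy example. The sketch below is not the team's actual algorithm; it is a simplified stand-in that uses a single row of pixels with a dark side and a bright side, moves the edge by a small fraction of a pixel in step with a tone, and recovers the motion from the average brightness of each frame. All names and values (frame rate, tone, amplitude) are assumptions for illustration.

```python
import math

FPS = 2000          # assumed high-speed frame rate
TONE_HZ = 200       # assumed test tone driving the vibration
AMPLITUDE_PX = 0.05 # assumed motion, a small fraction of one pixel
WIDTH = 16          # pixels in the toy one-row "image"
N_FRAMES = 200      # 0.1 s of video, a whole number of tone periods


def render_edge(offset_px):
    """One row of pixels: dark on the left, bright on the right.

    The border pixel takes a mixed value proportional to how much of it
    the bright side covers, like the purple border pixels in the article.
    """
    edge = WIDTH / 2 + offset_px
    return [min(1.0, max(0.0, (x + 1) - edge)) for x in range(WIDTH)]


def recover_motion(frames):
    """Estimate edge displacement per frame from mean brightness changes."""
    sums = [sum(frame) for frame in frames]
    baseline = sum(sums) / len(sums)
    # A shift to the right darkens the row, hence the sign flip.
    return [-(s - baseline) for s in sums]


def dominant_frequency_hz(signal, fps):
    """Strongest frequency in the signal, found with a plain DFT."""
    n = len(signal)
    best_k, best_power = 0, 0.0
    for k in range(1, n // 2):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(signal))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(signal))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    return best_k * fps / n


true_motion = [AMPLITUDE_PX * math.sin(2 * math.pi * TONE_HZ * i / FPS)
               for i in range(N_FRAMES)]
frames = [render_edge(offset) for offset in true_motion]
recovered = recover_motion(frames)

if __name__ == "__main__":
    print("dominant frequency:", dominant_frequency_hz(recovered, FPS), "Hz")
```

Even though the edge never moves by more than a twentieth of a pixel here, the mixed value of the border pixel carries enough information to recover the tone. Real footage is far noisier and more complex than this toy row, so the example only shows the principle.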

Video Specifications to Capture Adequate Visual Audio Data

The idea here is that the video should be clear enough and should have an adequate frame rate (higher than the frequency of the sound to be decoded). Videos below the standard 720p resolution can still yield enough visual sound data, although the resulting sound won’t be as clear and comprehensible, especially when trying to decode the voices of several people talking at once.

Applications of the Technology

This technology can be very useful for law enforcement. However, it still has its limitations. For one, the camera has to be fairly close to the object being recorded in order to capture enough visual detail to represent the vibrations. The MIT researchers have not yet mentioned the possibility of using telescopes to extend the 15-foot camera distance, but we reckon such a setup will work as long as the details captured are clear enough, with very minimal distortion from the magnifying lenses.


For more information about this exciting technology, you can visit the project’s official website. The site presents audio files and the video of the tests the research team had undertaken. The full paper (in PDF) and a link to supplementary materials and related publications are also presented on the site.