Inclusive Speech Technology: Addressing the Challenges for African American English Speakers

Voice technology has changed how we interact with our digital devices, and it promises a future in which we handle all manner of everyday tasks with simple voice commands. But for all the ease they bring, these systems frequently leave some people behind. African American English (AAE) speakers in particular are misunderstood or incorrectly transcribed at especially high rates, which points to serious racial disparities in the performance of speech recognition technology. Addressing this requires more than understanding the salient linguistic characteristics of AAE; it means adapting the technology to accommodate these differences so that voice recognition systems become truly inclusive.

Technology-Directed Speech: The Current Landscape

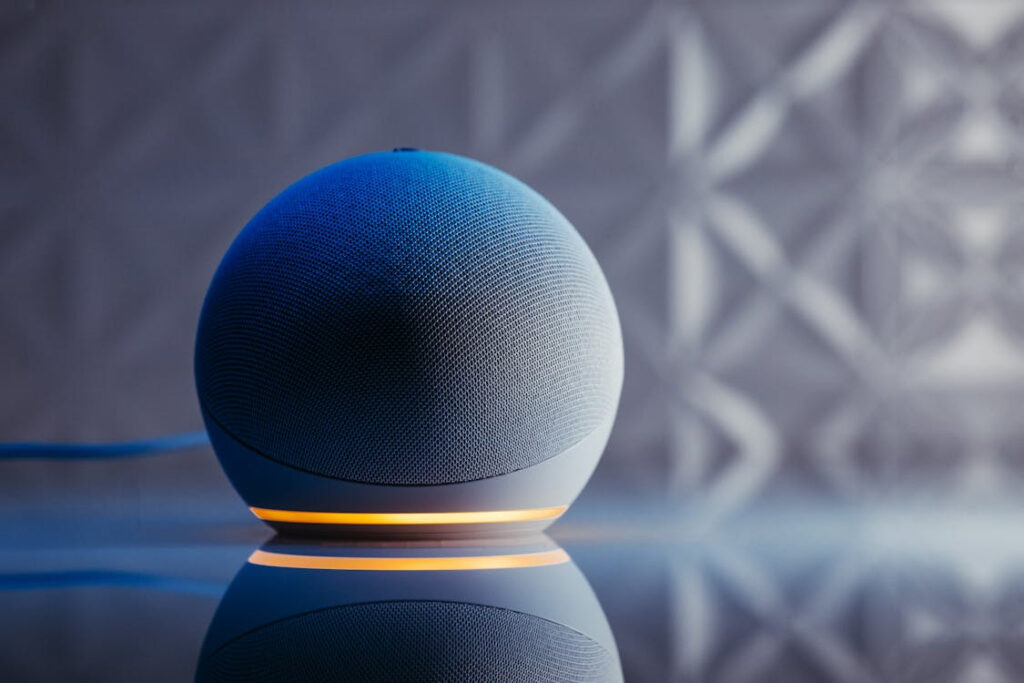

Voice assistants such as Amazon’s Alexa, Apple’s Siri, and Google Assistant have become integral to daily productivity and efficiency. However, these systems often struggle to recognize and process speech accurately, especially when it diverges from mainstream American English. This gives rise to what is known as technology-directed speech, in which speakers consciously shift to a louder, slower, and more distinct style to accommodate the limitations of voice assistants. Current research focuses predominantly on these adaptations within general U.S. English and largely overlooks the diverse linguistic profiles of other speaker groups, an oversight that frequently translates into higher error rates and a diminished user experience for those outside the linguistic mainstream.

The Unique Challenges of African American English

African American English (AAE) speakers face significant hurdles with current speech recognition technologies because AAE has linguistic features, including intonation patterns, phonetic variations, and syntactic structures, that differ from Standard American English. These differences often result in higher rates of misrecognition and transcription errors, which can perpetuate linguistic discrimination in essential services such as healthcare and employment. For instance, research indicates that speech recognition systems misrecognize as many as four out of every ten words spoken by Black men. This is a critical fairness issue, and it underscores the need for systems that can understand and process AAE as accurately as other dialects.
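Figures like "four out of every ten words" are typically expressed as a word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system's transcript into the reference transcript, divided by the number of words in the reference. The sketch below is a minimal illustration of that metric, not the scoring pipeline of any particular study. The function name and the example sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: 4 of the 10 reference words are transcribed wrongly.
reference = "turn on the kitchen lights and play some jazz music"
hypothesis = "turn on the chicken light and play sum jazz musical"
print(word_error_rate(reference, hypothesis))  # 0.4
```

A WER of 0.4 on this invented utterance corresponds to the "four out of every ten words" scale of error described above. Real evaluations usually also normalize punctuation and spelling before scoring, which this sketch leaves out.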

Adaptive Speech Patterns: Experiment and Findings

To understand how AAE speakers adapt their speech when interacting with voice technology, researchers from institutions like Google Research and Stanford University conducted experiments comparing how these individuals communicate with voice assistants versus humans (friends, family, or strangers). The study involved 19 African American adults who had previously encountered difficulties with voice recognition technology. Participants were asked to direct questions to both a voice assistant and human listeners, and their speech was recorded across the various conditions. The findings revealed that when speaking to voice assistants, AAE speakers significantly slowed their speech rate and reduced their pitch variation, adopting a more monotone delivery. These adjustments suggest a strategic, albeit subconscious, effort to make themselves easier for the technology to understand.
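The two adjustments reported above, slower speech rate and reduced pitch variation, can each be summarized with a simple number per utterance. The sketch below assumes speech rate is measured in words per second and pitch variation as the standard deviation of the pitch track in semitones. These are common choices, but the source does not say which measures the study used. The function names and all values are hypothetical.

```python
import math
import statistics


def speech_rate(n_words: int, duration_s: float) -> float:
    """Words per second for one utterance."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return n_words / duration_s


def pitch_variation_semitones(f0_hz: list[float]) -> float:
    """Standard deviation of pitch, in semitones relative to the median.

    Frames with f0 <= 0 are treated as unvoiced and skipped.
    """
    voiced = [f for f in f0_hz if f > 0]
    if len(voiced) < 2:
        raise ValueError("need at least two voiced frames")
    median = statistics.median(voiced)
    semitones = [12 * math.log2(f / median) for f in voiced]
    return statistics.stdev(semitones)


# Hypothetical data: the same 12-word question asked of a person and of an assistant.
human_rate = speech_rate(12, 3.0)
assistant_rate = speech_rate(12, 4.0)

human_f0 = [180.0, 210.0, 0.0, 165.0, 240.0, 190.0, 150.0]      # lively contour
assistant_f0 = [182.0, 186.0, 0.0, 180.0, 184.0, 181.0, 183.0]  # flatter contour

print(assistant_rate < human_rate)
print(pitch_variation_semitones(assistant_f0) < pitch_variation_semitones(human_f0))
```

Using semitones rather than raw hertz makes the variation measure comparable across speakers with different baseline pitch. In practice the pitch track would come from a pitch-estimation tool rather than a hand-written list.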

Towards More Inclusive Speech Recognition Systems

The disparities in voice recognition accuracy underscore a pressing need for more inclusive technologies that cater to a broader range of speech patterns and dialects. The ongoing research aims to bridge this gap by expanding the linguistic diversity included in speech recognition systems, ensuring that they can effectively serve all user communities. By addressing these technological barriers, developers can better support equitable access to voice-enabled services and applications. Future efforts may also explore adaptive algorithms that learn from user interactions to improve over time, ultimately fostering a more inclusive digital ecosystem where every voice is understood.